What are the 3 Main Elements Every Investor Should Know When Investing in a Biotech Company Producing a New Drug?

Let me paint you a picture. You're sitting at your desk, scrolling through endless biotech pitch decks, each one promising to cure cancer, reverse aging, or solve whatever medical crisis is trending that week. The CEOs are charming, the science sounds revolutionary, and the potential returns? Astronomical. But here's the thing – most of these companies will fail spectacularly, and I'm not talking about a gentle decline. I'm talking about the kind of failure that makes your portfolio look like it went through a wood chipper. So before you throw your hard-earned money at the next "breakthrough" therapy, let me share the holy trinity of biotech investing that could save you from financial ruin.

The biotech sector is a fascinating beast. It's where brilliant minds meet desperate hope, where science fiction becomes science fact, and where fortunes are made and lost faster than you can say "Phase 3 trial failure." I've spent years analyzing these companies, watching some soar to unimaginable heights while others crashed and burned despite having what seemed like promising technologies. Through all this chaos, I've learned that there are three fundamental elements that separate the winners from the losers, the diamonds from the rough, the actual breakthroughs from the elaborate scientific theater. These aren't secrets hidden in some vault – they're right there in the clinical data, if you know how to read between the lines.

Let's start with safety, the unsung hero of drug development. Now, I know what you're thinking – safety sounds boring, like wearing a helmet while riding a bicycle. But in the biotech world, safety is where dreams go to die. Here's the uncomfortable truth: when we're dealing with serious illnesses, we accept that drugs will have side effects. The question isn't whether a drug is perfectly safe – nothing is – but whether the damage it causes is worth the benefit it provides. This calculation gets ethically murky fast. A cancer drug that causes hair loss? No problem. One that causes liver damage? Well, if it's saving lives, maybe we can work with that. But when the side effects start killing patients faster than the disease itself, you've got a problem that no amount of clever marketing can fix.

The safety profile of a drug is like a tightrope walk over a pit of hungry lawyers. Companies need to demonstrate that their treatment won't turn patients into statistics, but they also can't be so conservative that their drug becomes useless. I've seen promising compounds fail because they caused unexpected heart problems in a small percentage of patients. The market doesn't care that 95% of people were fine – those 5% represent lawsuits, regulatory nightmares, and PR disasters. Smart investors dig deep into safety data, looking not just at the headline numbers but at the outliers, the discontinuation rates, and those footnotes that companies hope you'll skip. If a company is dancing around safety questions or burying adverse events in supplementary materials, run for the hills.

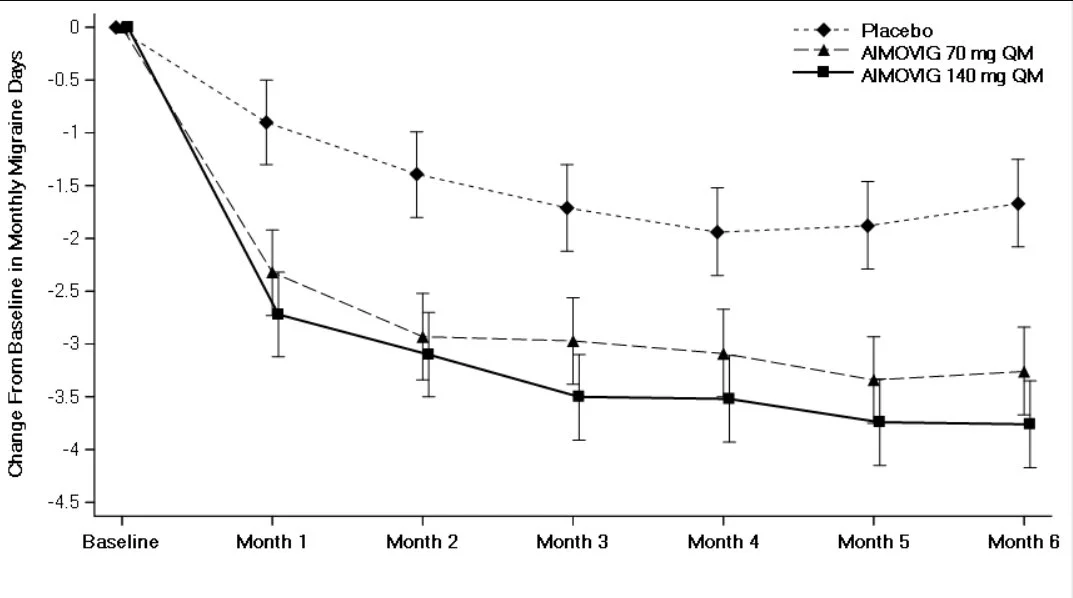

Moving on to efficacy, the showpiece of any biotech presentation. This is where companies love to dazzle you with impressive-sounding percentages and heartwarming patient testimonials. But let me tell you something about efficacy that most retail investors don't understand: it's all relative. When a company trumpets a "50% response rate," that sounds fantastic until you realize the current standard of care has a 45% response rate. Suddenly, that revolutionary breakthrough looks more like a marginal improvement. The magic number I look for? An improvement of more than 10 percentage points over the standard of care (SOC). Anything less, and you're essentially trying to convince doctors to switch to your expensive new drug for benefits that might be statistical noise.
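To make the 50% versus 45% comparison concrete, here is a minimal sketch that computes the gap two ways: in absolute percentage points and as a relative gain. The function name `improvement_over_soc` is my own invention for illustration, not an industry-standard calculation.

```python
def improvement_over_soc(new_rate: float, soc_rate: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent).

    Rates are fractions between 0 and 1, e.g. 0.50 for a 50% response rate.
    """
    absolute_pp = (new_rate - soc_rate) * 100
    relative_pct = (new_rate - soc_rate) / soc_rate * 100
    return absolute_pp, relative_pct


# The example from the text: a 50% response rate against a 45% standard of care.
abs_pp, rel_pct = improvement_over_soc(0.50, 0.45)
print(f"{abs_pp:.1f} percentage points, {rel_pct:.1f}% relative")
```

The same result can be quoted as roughly 5 points or roughly 11%, so it pays to check which of the two a press release is using.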

The efficacy game is where biotech companies become creative storytellers. They'll slice and dice data until they find a subgroup that responds beautifully to their treatment. "Our drug showed a 73% response rate!" they'll proclaim, conveniently forgetting to mention this was in left-handed patients born on Tuesdays who also happen to have blue eyes. I'm exaggerating, but not by much. The formula is simple: Response rate equals the number of patients who respond divided by the total treated. But watch how companies define "response" – is it complete remission, partial improvement, or just "didn't get worse"? The devil, as always, is in the definitions. When I evaluate efficacy, I want to see clear, meaningful improvements in outcomes that matter to patients, not statistical gymnastics that impress investors who don't know better.
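Here is a small sketch of how much the definition of "response" can move the headline number. The patient outcomes below are made up purely for illustration, and the category labels (`complete`, `partial`, `stable`, `progressed`) are my own simplification, not any trial's actual endpoint definitions.

```python
# Hypothetical best-response labels for 20 treated patients (invented data).
outcomes = (
    ["complete"] * 2      # complete remission
    + ["partial"] * 4     # partial improvement
    + ["stable"] * 6      # "didn't get worse"
    + ["progressed"] * 8  # disease got worse
)


def response_rate(outcomes: list[str], counts_as_response: set[str]) -> float:
    """Responders divided by total treated, for a given definition of 'response'."""
    responders = sum(1 for outcome in outcomes if outcome in counts_as_response)
    return responders / len(outcomes)


strict = response_rate(outcomes, {"complete"})
standard = response_rate(outcomes, {"complete", "partial"})
loose = response_rate(outcomes, {"complete", "partial", "stable"})

print(f"complete only:          {strict:.0%}")
print(f"complete + partial:     {standard:.0%}")
print(f"anything but worsening: {loose:.0%}")
```

Same 20 patients, same drug, and the response rate ranges from 10% to 60% depending on what gets counted.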

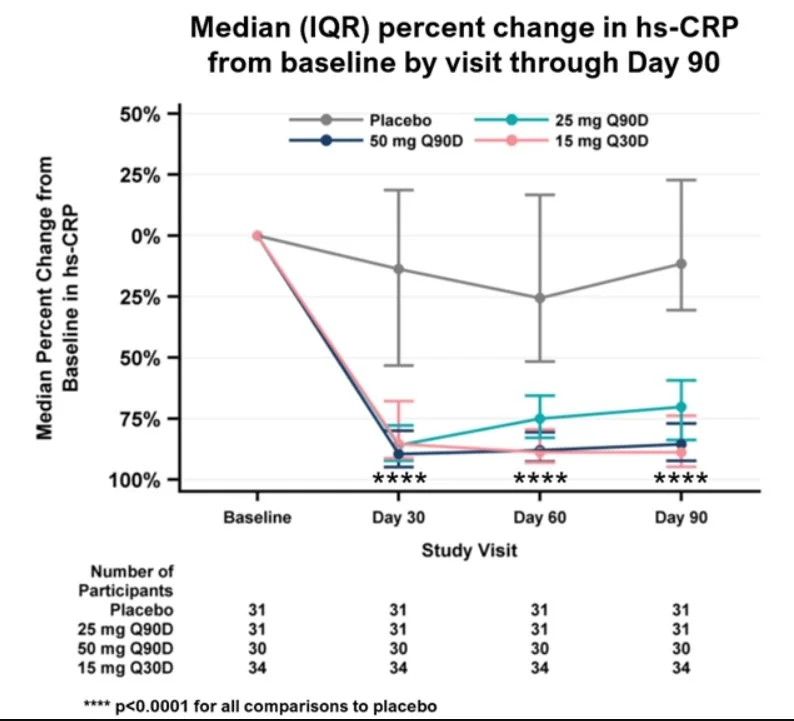

Now we arrive at my favorite element: statistical significance, the mathematics of not fooling yourself. This is where we separate the real science from the wishful thinking. The gold standard is a p-value less than 0.05, meaning that if the drug did nothing at all, results this strong would turn up by pure luck less than 5% of the time. Sounds reasonable, right? Well, here's where it gets interesting. Companies that are confident in their drugs test them on hundreds of patients across multiple sites. Companies that aren't so sure? They'll test on a handful of carefully selected patients and then manipulate the hell out of those results. I've seen trials with 12 patients claiming breakthrough status. That's not science; that's a magic show.
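To see why trial size matters so much, here is a rough sketch of a p-value calculation for comparing two response rates. It uses a pooled two-proportion z-test with a normal approximation, which is one common textbook method and not necessarily what any given trial's statistical plan specifies. The patient counts are invented, and the approximation is crude for very small arms.

```python
import math


def two_proportion_p_value(x_new: int, n_new: int, x_soc: int, n_soc: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test (normal approximation).

    x_* are responder counts, n_* are patients per arm.
    Assumes the pooled rate is strictly between 0 and 1.
    """
    p_new = x_new / n_new
    p_soc = x_soc / n_soc
    pooled = (x_new + x_soc) / (n_new + n_soc)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_new + 1 / n_soc))
    z = (p_new - p_soc) / std_err
    # erfc(|z| / sqrt(2)) equals the two-sided tail area of a standard normal.
    return math.erfc(abs(z) / math.sqrt(2))


# Same observed rates (60% vs 45%), very different trial sizes (hypothetical).
p_small = two_proportion_p_value(12, 20, 9, 20)       # 20 patients per arm
p_large = two_proportion_p_value(240, 400, 180, 400)  # 400 patients per arm

print(f"20 per arm:  p = {p_small:.3f}")
print(f"400 per arm: p = {p_large:.6f}")
```

With 20 patients per arm, a 15-point gap sits well above the 0.05 threshold. The identical gap with 400 per arm clears it easily. The headline percentages are the same; only the evidence behind them changed.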

The statistical significance trap is particularly insidious because it preys on our desire to believe. When a desperate company runs a tiny trial and gets positive results, they'll shout it from the rooftops. But small trials are like flipping a coin five times and getting four heads – impressive, but meaningless. The biotech graveyard is littered with companies that got lucky in Phase 2 with 30 patients, raised hundreds of millions based on those results, then watched their drug fail spectacularly when tested on 300 patients in Phase 3. Real statistical significance comes from large, well-designed trials that test the drug in conditions resembling real-world use. If a company is cherry-picking data, running multiple analyses until something works, or using bizarre statistical methods you've never heard of, they're probably trying to polish a turd.
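Two of the claims above are easy to check with a few lines of arithmetic: how often a fair coin gives at least four heads in five flips, and how quickly the odds of a fluke "significant" result climb when a company keeps running analyses. This is a minimal sketch. The second function assumes the analyses are independent, which real subgroup analyses usually are not, so treat its output as a rough guide.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float = 0.5) -> float:
    """Probability of at least k successes in n independent trials (binomial tail)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def chance_of_false_positive(num_analyses: int, alpha: float = 0.05) -> float:
    """Chance that at least one of several independent analyses of a drug
    with no real effect crosses the alpha threshold by luck alone."""
    return 1 - (1 - alpha) ** num_analyses


four_of_five = prob_at_least(4, 5)
print(f"At least 4 heads in 5 flips: {four_of_five:.4f}")  # 0.1875

for k in (1, 5, 20):
    print(f"{k:>2} analyses: {chance_of_false_positive(k):.1%} chance of a fluke hit")
```

A fair coin produces four or more heads nearly one time in five, so the "impressive" result is nothing special. And under the independence assumption, 20 looks at the data give a useless drug roughly a 64% chance of producing at least one p-value under 0.05.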

Here's where these three elements become truly powerful: they work together in ways that can make or break an investment. A drug might be incredibly safe but only marginally effective – great for patients, terrible for profits. Another might be highly effective but with safety issues that limit its use to only the most desperate cases – smaller market, bigger headaches. And then there's the statistical significance that ties it all together. I've seen drugs that looked amazing on safety and efficacy in small trials completely fall apart when properly tested. The companies that succeed are those that nail all three elements with data so clean you could eat off it. They're rare, but when you find one, you've found gold.

So what does this mean for you, the investor standing at the edge of the biotech casino? First, never trust a company that's cagey about any of these three elements. If they're highlighting efficacy but glossing over safety, there's a reason. If they're running tiny trials and making grand pronouncements, they're hoping you won't notice. Second, learn to read clinical trial results like your money depends on it – because it does. Those boring tables and forest plots contain the truth that press releases try to spin. Third, remember that even when all three elements look good, biotech investing remains risky. Regulations change, competitors emerge, and sometimes good drugs fail for reasons nobody saw coming. But by focusing on these fundamentals, you'll avoid the obvious disasters and give yourself a fighting chance at finding the winners. The biotech lottery is rigged, but at least now you know how to read the odds.